By Sine N. Just

Cultural and communication studies of how digitalization reorganizes everyday life

The anthology Digitale liv. Brugere, platforme og selvfremstillinger (Digital Lives: Users, Platforms and Self-Presentations), edited by Rikke Andreassen, Rasmus Rex Pedersen and Connie Svabo, brings together a group of researchers to study the different ways in which digitalization affects people's lives and everyday routines. Most of the contributors have their academic home at Institut for Kommunikation og Humanistisk Videnskab, and some of us (myself included) are members of Digital Media Lab. We are thus all cultural and communication researchers with an interest in digitalization, but rather than a narrow focus, the anthology displays the field's thematic breadth, methodological range and theoretical eclecticism. In this review I give an overview of the topics, methods and theories the book contains, and thereby a foretaste of what awaits anyone who dives into it.

Digital everyday life

Everyday life has become digital to such a degree that we do not think about it, until part of the digital infrastructure becomes visible through a ‘glitch’ in the otherwise flawless flow of the matrix. We see our dependence on the digital when the internet ‘lags’, as the children shout with equal parts anger and resignation. We see it when the iPhone breaks and it becomes impossible to keep track of appointments, take pictures, listen to music and get in touch with friends, to name just some of the functions now gathered in a smartphone. Or when the barcode on the groceries cannot be scanned, and one has to wait for one of the supermarket's ever fewer employees to turn up.

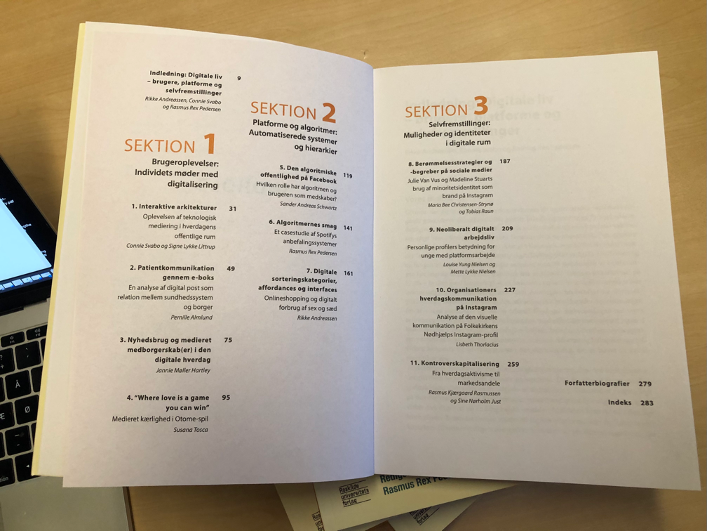

The book's subtitle names three overarching areas or themes within this pervasive development, and the book is structured around them. The first is ‘users’. What does it mean for us, as individuals and communities, that we are increasingly defined in and through the digital technologies we use? When we as citizens are organized in digital publics, find our news online and receive information from ‘the public sector’ in e-Boks? When urban spaces become digital and our movement through them is governed by our ability or willingness to interact with the technologies around us? When computer games flourish in ever more subgenres, and one can, for example, practise love through the games?

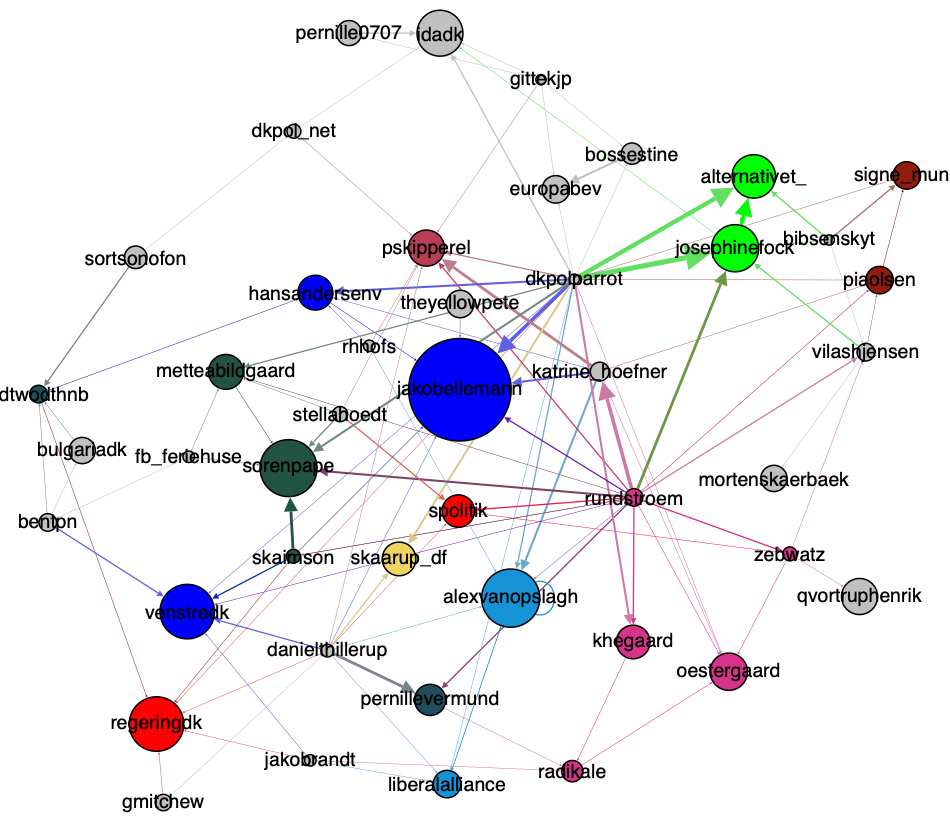

The second theme is ‘platforms’. Here the focus is on the algorithmic construction of digital infrastructures: how do the underlying algorithms shape users' possibilities for action on, for example, Facebook, Spotify and dating apps? What does it mean that the interaction between user and algorithm tends to reinforce the user's taste? When one can both set one's own preferences and is offered more of the type of content one asks for, the result is on the one hand effective targeting, but on the other hand also missed opportunities. The ‘People who liked this also liked…’ logic can be a good way to target content, but it can also lead to one-track echo chambers and to increased predictability and control.

Finally, the third theme, ‘self-presentations’, focuses on how different user groups use the technological possibilities for identity formation and/or self-promotion. Whether the subject is influencers who become famous for ‘being themselves’, precarious workers who seek employment via labour platforms, or professional organizations that use digital communication as a means of realizing strategic goals, individual and collective identities are increasingly shaped by the spaces of possibility that digital platforms offer. This last part of the book focuses on how these spaces of possibility are used in practice.

Digital methods?

The anthology contains examples of how pervasive digitalization also creates new research practices in the form of digital methods. For example, Sander Andreas Schwartz, in his chapter on the algorithmic public sphere, uses the ‘walk-through’ method to examine Facebook's design and functionality, and in the chapter on Spotify's recommendation systems Rasmus Rex Pedersen uses a ‘critical reading of the algorithm’, which enables a closer examination of how the recommendations actually work.

It is characteristic, however, that none of the chapters works with digital methods for collecting and analysing ‘big data’. Instead, the focus is on qualitative studies, often with particular attention to the user, whether via qualitative interviews, protocol analyses or participant observation of user experiences, or through studies of the digital communication of individual and collective actors. The anthology thereby shows that digitalization has not made classic approaches from the humanities and social sciences redundant; on the contrary, it makes a great deal of sense to study the new phenomena with well-known methods.

Digital humanities

The chapters thereby contribute deep and detailed insights into various forms of sociomaterial meaning-making; they understand and explain ‘digital lives’ as ways in which technologically conditioned spaces for action arise and are used. This must be one of the foremost tasks of the humanities today: to understand what it means to be human in a digital age, how we shape our digital tools and how they shape us. The anthology contributes precisely such understandings by drawing on a broad range of theoretical perspectives, models and concepts. These range from actor-network-theoretical arrangements of human and non-human actors, through digital affordances, to realized actions and their consequences. They also range from control, normalization and reinforcement of existing ideological frameworks, for example via the marketization of shitstorms, to creative use of the established frameworks to explore possible alternatives, for example through the self-commercialization of minority subjects.

If you are specifically interested in one or more of the themes treated in Digitale liv, or more generally concerned with the question of how technological developments affect human communities, there is plenty of inspiration to be found in the anthology. As the back-cover text promises, the book can be “read by anyone interested in the digitalization that increasingly shapes our society and digital lives”.

By Mette Wichmand

By Jannie Møller Hartley
